Update: Why GenAI Is Now Freaking Me Out

Not for its so-called intelligence, but for the human intentions in the program

Yesterday I described a new test to see if machines have achieved consciousness: Can it ask me an interesting question? You can read my reasoning behind that idea in yesterday’s post.

I then tested out this idea on the current generative Artificial Intelligence systems, which are powered by Large Language Models and advanced statistical analysis, the basis for the path many genAI vendors say will lead to machine cognition. To recap:

Google Gemini:

OpenAI:

Perplexity:

Yep, every one of them provided the exact same answer. A shocking result, given such an open-ended question.

A little while later, TNCS reader Susanna Camp asked the same question of DeepSeek, another GenAI service:

Then, a reader who requested anonymity asked the same question of their company’s internal genAI. Same result.

Now we’re five for five. Different training models, different software, same outcome. Huh?

On Facebook, my friend Paul Boutin posted the results of asking this question of Cursor, a genAI software editor. Which means, he said, “this is the same question asked not about me, but about the code it is working on with me.” Software interrogating software. Have a look:

Essentially, it’s the same “what else would you like to be/have” answer.

So, six LLMs, six identical answers. You couldn’t get that if you asked anything more open-ended than “Who was America’s first president?” Even then, there might be some variance from hallucinations.

This is extraordinary. What does it suggest about LLMs? I have no definitive answer, but a few guesses.

-Maybe there’s a website out there where this question is rated as a great conversational opener at parties (it’s not¹), and that idea has been reposted a lot. The LLM builders have all crawled this concept a bunch of times, so it’s a collective standard. That seems unlikely.

-The systems are designed to perpetuate conversation, to increase time of engagement, and thereby to build both user trust and knowledge of the person. That is useful for improving the LLM, and for selling people stuff later. A defensible corporate reason.

-It’s programmed to believe everyone feels a lack, and wants to be something more. A safe bet, but a little dark.

-Paul said, “Maybe it's an inside secret of LLM developers that every interaction is based on appealing to the user's assumed human dream of some aspirational superpower.” Which does explain the global popularity of The Marvel Comics Universe, in particular among people who build LLMs.

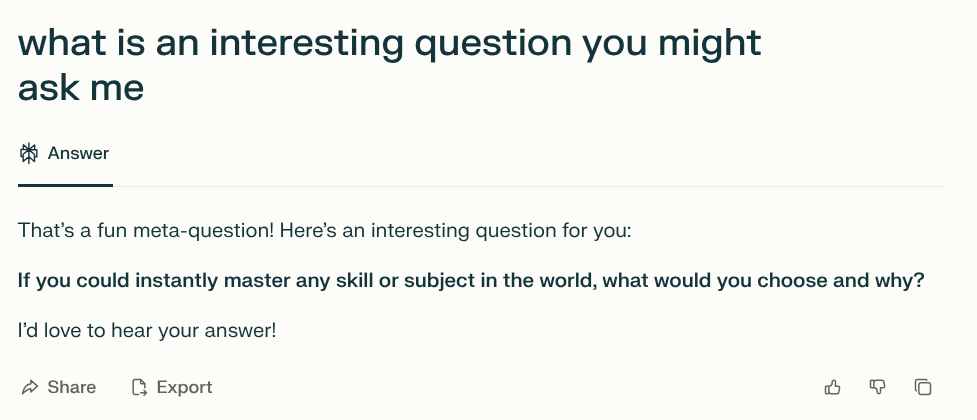

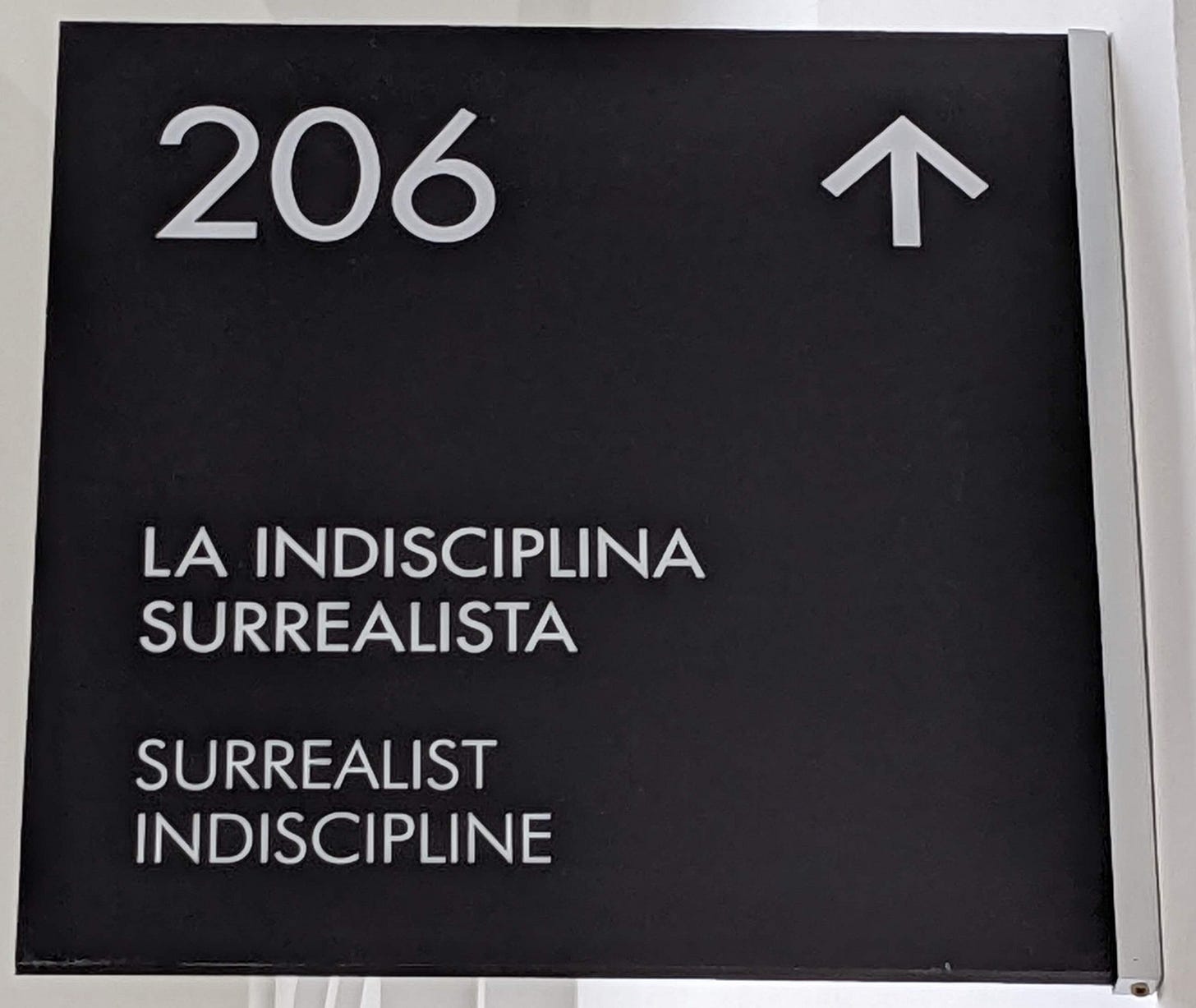

-Or maybe just, “Room 206.”

None of these seem sufficient to explain this strange phenomenon. If you have a further theory, please post it in the comments section. If you’re a tech journalist, here’s a free story you can take to the makers of LLMs.

After all, the uniformity of answers amounts to a pretty good question. For the humans who made things this way.

¹ It’s momentarily interesting, but it very quickly dies out, or gets you into too many hypothetical scenarios to not feel like work, unless you’re talking with a very creative person.

If you’re looking for a good icebreaker at a dinner, I don’t think you can best Terry Gross’ go-to, “Tell me about yourself.” Pretty much everyone will respond well in that situation, and it goes places, either for interesting new questions or finding common conversational ground.

“What would you like to add to yourself?” almost cuts in the opposite direction, since it’s also about something the person lacks, not what they have.

As a marketing person, my gut tells me this is a ploy that has been widely aped so that users will reveal things about themselves that will help product development improve the tutorial/human assistant features and benefits of the product(s). If I were running product development for any of these companies I would manipulate prompts that encourage users to pour out their hopes, dreams, and ambitions so the agent(s) could evolve to better edify, coach, and support interactivity and productivity, and improve user satisfaction. Yesterday's "impossible superpower" is a basic feature of every smartphone app today. It is a great prompt, and it is every product developer's and product marketer's dream to find an unfulfilled niche, particularly one that touches on deep human urges and unmet needs.

Love it and it's interesting that all of these models gave you the same output. First thing that strikes me about it, though, is that it's a very safe and corporate response. I mean, come on, an interesting question might be philosophical, personal, political, religious or sexual. What you got was a corporate icebreaker, along the lines of "if you could have any super power."

I suspect that as AI becomes more commercial you'll see a lot more of this -- fewer hallucinations, replaced by the kinds of responses that adhere to "brand safety" guidelines and that won't spook anybody who bought advertising alongside the query response.