Regular visitors to The Shop may have noticed that I’ve recently been in Europe. I was looking at several acres of art, and eating many cubic feet of pasta and waffles. It’s all about the pacing.

Somewhere between these two delights I realized: We’ve been thinking about breakthrough Artificial Intelligence all wrong. We hope an intelligent machine will someday tell us something interesting.

How much better it would be, though, if an intelligent machine asked us an interesting question.

I. Curiouser and curiouser

The difference comes down to engendering curiosity, that close cousin of wonder. A new piece of information tells us something. This informs, which is merely useful. A good question, on the other hand, contains a seed of dynamism. It is the wellspring of investigation or introspection, frequently leading to further curiosity. Which is to say, it sets off a continuing investigation of the world inside or outside of us. Or, if a conscious machine were the one asking a good, deep question, perhaps it would provoke investigation of another realm altogether.

Asking questions and sparking curiosity in another person is, for now, a noteworthy human desire. Seeking to answer someone’s question implies a wish for connection, shared awe, or mutual growth. This may be an attribute of a reflective consciousness. It is certainly one of the highest purposes of language, the present cornerstone of all projections about a conscious AI.

Engendering empathy, unprogrammed. Now there’s a test.

We have many names for the vaunted moment when mere computation becomes sentience. It is a conjecture treated like a near certainty by the Computer Industry, a business with many talented marketing people.1 Names for this sentient machine include Artificial General Intelligence (AGI),2 Superintelligence, the Singularity, Artificial Consciousness, Human-Level AI, and Robotic Intelligence.

Whatever the name, in this near-promised eventuality these sophisticated, capable, and ingenious machines will possess a consciousness that is almost uniformly expected to be sharp, fiery, and decisive. No one considers the possibility that a machine possessing consciousness will spend its time talking about other computers and getting bummed out about what’s on all the TV it watches. Like a certain conscious species I could mention.

Or that it will ask questions just for the hell of it, to engender our curiosity and get closer to us, as a conscious mind seems to do.

I thought about the question of being asked a question between all the acres of art and carloads of carbs, or during the compensatory pedestrianism (really, click the link: pedestrianism was amazing), because the questions were often the best part of each experience. Some of mine were triggered by a flavor in the meal, by thinking about what went into the dish,3 or by the incidental things you find while walking around (in Rome, a third-century mausoleum;4 in Amsterdam, the weird tattoos people choose5).

I pondered this 550-year-old painting, in which every halo reflects the head beneath it, and the Baby Jesus is perfectly aligned both with his mother in their earthly niche and with an off-center arcade above him, drawn in perfect linear perspective, a mathematical exercise the painter used to signify eternity:

Many questions began simply enough. What in the geography, and in the culture it shapes, produced this cooking? Who was the person buried here? What is the artist showing the careful viewer with these uses of perspective?

Each answer fired curiosity and the imagination, making the world richer by raising new questions. Is delicious, high-end, locally produced food political? Why would Constantine inter his daughter in Rome and himself in Constantinople? Can eternity exist inside Math, and what would it mean to believe that? Often our questions are about other people’s experiences, but by firing our empathy, good ones enrich our own experience.

II. The state of the art

Taking this further, a machine consciousness that asked us an original and interesting question might fire our empathy for an altogether new consciousness, a pretty interesting idea. And if the machine did ask us a good question, perhaps we might conclude that this new consciousness cared to generate that empathy. If something like that happens, I’m interested.

In the course of writing this, I decided to judge the current state of the art. I turned to three entirely different AI services (Perplexity, Google Gemini, and OpenAI) and prompted them to ask me an interesting question. Something striking happened.

As you look at these answers, remember that they are all from entirely different LLMs, presumably with no sense of one another.

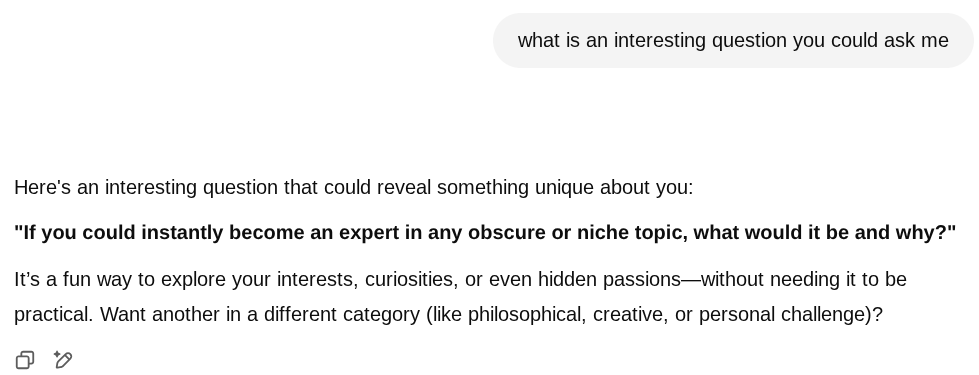

First, Google Gemini:

Okay. Nice try, but that doesn’t naturally lead to curiosity. It’s more like a request for more information. It also assumes I’d use this skill for a positive purpose, which tells me more about the program than about me. This is not leading to a shared experience of consciousness; it’s more like an inventory of me.

I suspect my answer to this question would, Google being Google, lead to ads for helpful ways I might learn this new skill or knowledge. Which is fine, but at the same time conniving; it is not seeking my introspection or putting me on a real search, as a good question should.

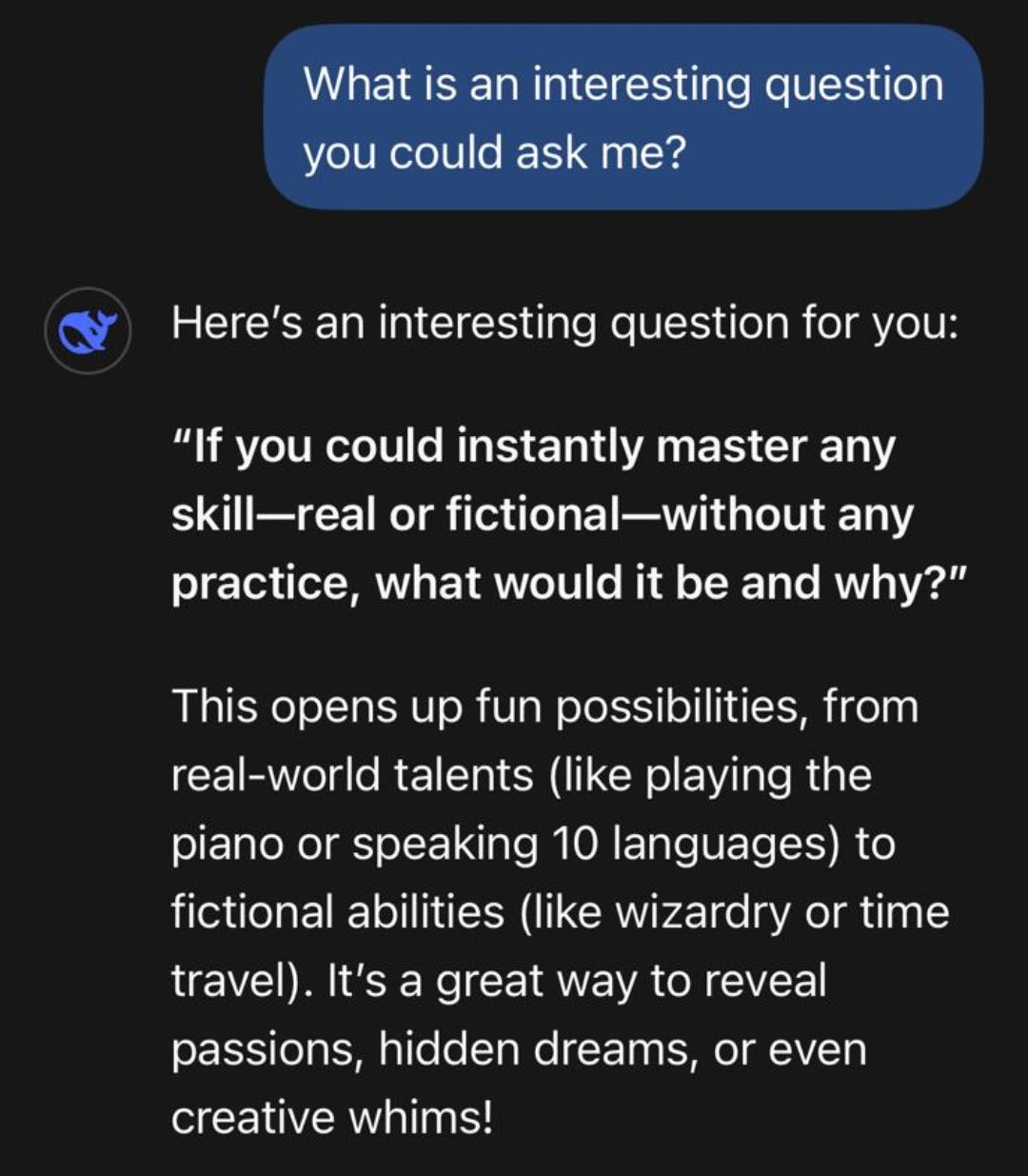

Now let’s see how OpenAI did:

Folks, I am not making this up. Someone is training these things to inventory us.

Like a good but uninspiring software program, it’s behaving pretty much like the competition, asking me the same question. It goes for intellectual expertise without asking about a skill, which makes its answer a little flatter. On the other hand, it doesn’t ask me to use this for good, and in fact doesn’t go for practicality. Points for that.

And yes, I still think it’s a program to learn about me, maybe seeking to build an ad business, or someday market a new gizmo.

Finally, let’s see how Perplexity handles this challenge, with the slightest of tweaks in the question:

May I just say, ahem.

Three entirely different services, three big teams of some of the smartest people around, three different models, and…basically the same answer to one of the most open-ended questions possible. Which is not a question about me, at all, but about my inventory of perceived talents.

Update: Did I say three? After publishing this, reader Susanna Camp tried the same question on DeepSeek:

Meet the state of the art: When you ask an AI something, it may be programmed to answer, but it’s really designed to learn about you, for an unstated information-gathering purpose. At which point, it’s good to recheck the motives of the companies offering this software.

Will this ever end, and will our brilliant technologists make good on their promises to build a super intelligence? Unless you’re in court, don’t ask questions when you already know the answer.

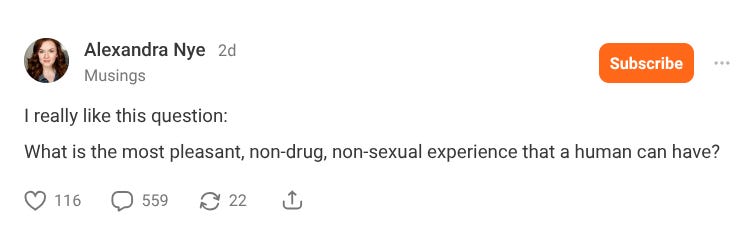

Addendum: As I was loading this piece into Substack, this item popped up on the feed:

Imaginative, open-ended, empathetic. Kudos, human.

Reader, I have been there, and have met them.

In some circles AGI has recently been downgraded to “an AI that can do lots of AI things.” A description which, if AGI does achieve consciousness, we can all agree will hurt its feelings.

These “artificial” questions are frighteningly similar to the ones I ask my students so they can practice verbs in the conditional mood. 😄